Gah.

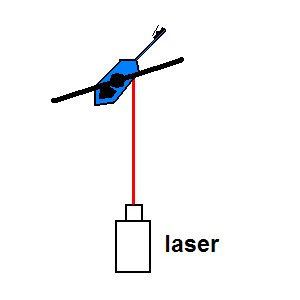

Ok, here's the situation. (Err...apologies to Will Smith.) We're still stuck at the last point (see below): trying to figure out where the !$$@#!# the interceptor is pointing at any given time.

Then it came to me...if the camera is mounted ON the interceptor, we just "aim" by putting any moving object in the middle of the video and ker-pow! Simple, right?

Of course not.

There's a reason I didn't take this approach at the beginning. If the camera is mounted on the interceptor, it's indeed easier to target...but it's a heckofa lot harder to detect a moving object. No simple segmentation by comparing a background image to the current image, because...

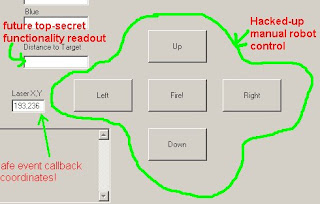

Of course, there are ways to get around this. And given my lack of brainstorms on how to solve my other aiming problem, I've been researching just how hard it'd be to negate camera motion and extract moving objects from a moving camera stream. Because I'm just sure someone has done it..!

In theory, it's pretty simple. (Start by googling "camera motion" and "optical flow".) You just need to identify a series of good tracking points in the image (think: corners, contrast...what we call "video texture"). Compare each point's location from one frame to the next to get a motion vector per point, compare all your vectors, assume that the most common ones are due to camera motion, and you've got your first part.

Then go back and look for motion vectors that didn't match. Those'd be your "moving object".
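To make that concrete, here's a rough sketch of the two steps. It's in Python rather than C# purely to keep it short, and it is not what the project actually runs. Big assumptions: the tracking points have already been matched between frames by some optical-flow tracker, the camera motion is a plain pan (no rotation or zoom), and the function name `split_camera_and_object_motion` and its `tolerance` parameter are made up for illustration.

```python
from collections import Counter


def split_camera_and_object_motion(prev_pts, curr_pts, tolerance=2.0):
    """Guess the camera motion and flag points that disagree with it.

    prev_pts / curr_pts: matched (x, y) positions of the same tracking
    points in two consecutive frames (matching is assumed done elsewhere).
    tolerance: hypothetical pixel slack before a point counts as "moving".
    """
    if not prev_pts or len(prev_pts) != len(curr_pts):
        raise ValueError("need two non-empty, equally sized point lists")

    # One motion vector per tracked point.
    vectors = [(cx - px, cy - py)
               for (px, py), (cx, cy) in zip(prev_pts, curr_pts)]

    # Round to whole pixels and take the most common vector as the
    # camera motion (assumes most points belong to the background).
    buckets = Counter((round(dx), round(dy)) for dx, dy in vectors)
    camera_motion = buckets.most_common(1)[0][0]

    # Anything that doesn't match the camera motion is a candidate mover.
    movers = [i for i, (dx, dy) in enumerate(vectors)
              if abs(dx - camera_motion[0]) > tolerance
              or abs(dy - camera_motion[1]) > tolerance]
    return camera_motion, movers


if __name__ == "__main__":
    prev = [(10, 10), (50, 20), (80, 60), (30, 70), (60, 40)]
    curr = [(13, 9), (53, 19), (83, 59), (33, 69), (70, 48)]
    print(split_camera_and_object_motion(prev, curr))
```

In the sample data, four points all shift by the same amount (the camera pan) and the fifth goes its own way, so it's the one that gets flagged. Real footage would be much noisier than this, which is exactly where the "Simple" part falls apart.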

See? Simple.

Right.

Beaucoup research has been done on this very thing. As a matter of fact, Intel even made available a C library called OpenCV (subsequently open-sourced) that has a bunch of useful functions for doing exactly this.

Of course, it's not C#. So off we go to see if there's a wrapper/converted API for us poor auto-garbage-collection-addicted fools. And the gods did smile, and indeed there are two! Unfortunately, neither is under active development and neither is a complete conversion, but them's the breaks.

OpenCVDotNet got me up and running quickly. Good samples, but a bit slim on documentation.

SharperCV seems to be the more complete of the two, in both documentation and available functions.

I really did like OpenCVDotNet, but in the end I'm leaning toward SharperCV. It seems to follow the OpenCV format more closely, which makes it easier to translate the C-oriented tutorials and samples to C#.

However, before I tear the current source to bits and begin a rebuild, I am going to see if I can shoot some general videos of the current performance with a static camera in a light-controlled environment.

All that said, I'm beginning to wonder how much longer I'm going to keep going in this particular direction. At this point, I've accomplished several of the original goals of the project.

Learn C#/use decoupled design/try agile practices

Self grade: B+

I've refactored the inner workings several times. I wrote more, and more elaborate, unit tests for this project than for pretty much anything I've done to this point. For my level of expertise, I'm pretty happy with the design as well.

That grade recognizes that I did OK for where I was at...the next project will be held to much higher standards.

Provide a coding example I'm proud of

Self grade: C

Some of the code is nice. Commented. Well reasoned and logical.

And some is...well...spaghetti. Tightly coupled components. Parents requiring somewhat intimate knowledge of the children's inner workings. I did do my best to decouple, but I learned as I went. (That's the nice way to put it!)

Exercise programming problem solving muscles

Self grade: A

The muscles are sore. And I'm not benching a metaphorical 300lb programming stack. But I've certainly re-awakened some of those logical decomposition skills.

Do Something Cool

Self grade: A-

Well, at least >>I<< think it's pretty cool. However, I had visions of a very fast tracker with ominous voices tracking cubicle visitors. The NXT motors and my design just didn't seem to allow that. I'm sure a better design could have alleviated some of the problems. Hrmmm...the NXTShot sure looks to be more responsive. I may just have to do some "mechanical design analysis" for a Mark III version. ;^)

Next up, videos and pix of current performance...