Well lookie there. It's been one year exactly to the _day_ since my last post.

I didn't plan that. Really. No, seriously.

I finally tore everything apart about 3-4 months ago. The Lego Interceptor is no more. Figured I'd like to actually use the kit for something. So I sighed and reduced it to its component bits. Thought I'd never actually rebuild it.

But it's coming back. Sort of.

At last night's BSDG session, I wondered idly (out loud) about doing an NXT session at the next codecamp. You know, a little demo on the MindSqualls API, something nifty for the robot to do (probably involving computer vision/webcamish things). It was generally agreed upon that it'd be cool.

And we talked about CI. And CruiseControl.NET. And ... and ... lightning struck Mr. Brandsma and he had a vision...

Well, no. Not really. But it could have been. What he actually said was "ooh! Oooh! Do a demo and hook your lego interceptor to the CI build and have it fire randomly at the audience when someone breaks the build!!" Kinda like that orb/lavalamp thing. Only more...interactive.

Awesome idea. (and a real crowd pleaser. Assuming I can get it to fire M&M's or something...)

And then I thought "wait a minute...I can read the rotation values of the motors...what if we did a little Mutt & Jeff demo with two developers checking in code...have the robot in a central location...calibrate it to know where the two sit...and when someone breaks the code it announces it to the world and PELTS THE OFFENDER WITH JELLYBEANS!"

This, I think, is brilliance beyond belief.

Now if we can just build it...

Friday, June 06, 2008

Wednesday, June 06, 2007

Whence do I point, Horatio?

The squirrels...they run so inside my head.

Gah.

Ok, here's the situation. (err...apologies to Will Smith) We're still at the last point. (see below) Trying to figure out where the !$$@#!# the interceptor is pointing at any given time.

Then it came to me...if the camera is mounted ON the interceptor, we just "aim" by putting any moving object in the middle of the video and ker-pow! Simple, right?

Of course not.

There's a reason I didn't take this approach at the beginning. Because...if the camera is mounted on the interceptor, it's indeed easier to target...but it's a heckofa lot harder to detect a moving object. No simple segmentation of comparing background images to the current image because... the background is constantly changing!

Of course, there are ways to get around this. And given my lack of brainstorms on how to solve my other aiming problem, I've been researching just how hard it'd be to negate camera motion and extract moving objects from a moving camera stream. Because I'm just sure someone has done it..!

In theory, it's pretty simple. (Start by googling "camera motion" and "optical flow".) You just need to identify a series of good tracking points in the image (think: corners, contrast...what we call "video texture"). Compare their locations from one frame to the next to get a motion vector for each point, compare all your vectors, assume that the most common ones are due to camera motion, and you've got your first part.

Then go back and look for motion vectors that didn't match. Those'd be your "moving object".

See? Simple.

Right.
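For the curious, here's roughly what that "most common vector wins" idea looks like. This is a minimal sketch in plain Python (not the project's C#, and not OpenCV), and it assumes somebody else has already done the hard part of finding and matching the tracking points between two frames. The function name and the `bucket` and `tolerance` knobs are made up for illustration.

```python
from collections import Counter


def split_camera_and_object_motion(prev_pts, curr_pts, bucket=2, tolerance=3):
    """Separate tracked points into camera motion and independent movers.

    prev_pts / curr_pts are matching lists of (x, y) positions for the same
    tracking points in two consecutive frames. bucket and tolerance are
    hypothetical tuning knobs, in pixels.
    """
    # One motion vector per tracked point.
    vectors = [(cx - px, cy - py)
               for (px, py), (cx, cy) in zip(prev_pts, curr_pts)]
    if not vectors:
        return (0, 0), []

    # Quantize the vectors so near-identical ones vote together, then take
    # the most common bucket as the camera's own motion.
    votes = Counter((round(dx / bucket), round(dy / bucket))
                    for dx, dy in vectors)
    (bx, by), _ = votes.most_common(1)[0]
    camera = (bx * bucket, by * bucket)

    # Anything that disagrees with the camera motion is a candidate mover.
    movers = [
        i for i, (dx, dy) in enumerate(vectors)
        if abs(dx - camera[0]) > tolerance or abs(dy - camera[1]) > tolerance
    ]
    return camera, movers
```

Feed it five points where four slide the same way and one wanders off on its own, and the wanderer's index comes back in `movers`. A real camera pan also rotates and scales the picture, so treat the single shared vector as a simplification.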

Beaucoup research has been done on this very thing. As a matter of fact, Intel even made available a C library called OpenCV (subsequently open-sourced) that has a bunch of useful functions for accomplishing this very thing.

Of course, it's not C#. So off we go to see if there's a wrapper/converted API for us poor auto-garbage-collection-addicted fools. And the gods did smile and indeed there are! Unfortunately, neither is under active development nor a complete conversion, but them's the breaks.

OpenCVDotNet got me up and running quickly. Good samples, but a bit slim on documentation.

SharperCV seems to be the more complete of the two, both from a documentation and an available function point of view.

I really did like OpenCVDotNet, but in the end I'm leaning toward SharperCV. It seems to follow the OpenCV format more closely, which makes it easier to translate the C-oriented tutorials and samples to C#.

However, before I tear the current source to bits and begin a rebuild, I am going to see if I can get some general videos shot with current performance with a static camera in a light-controlled environment.

All that said, I'm beginning to wonder how much longer I'm going to keep going on this particular direction. At this point, I've accomplished several of the original goals of the project.

Learn C#/use decoupled design/try agile practices

Self grade: B+

I've refactored the inner workings several times. I wrote more, and more elaborate, unit tests for this project than pretty much anything to this point. For my level of expertise, I'm pretty happy with the design as well.

That's realizing I did ok for where I was at...the next project will be held to much higher standards.

Provide a coding example I'm proud of

Self Grade: C

Some of the code is nice. Commented. Well reasoned and logical.

And some is..well..spaghetti. Tightly coupled components. Parents requiring somewhat intimate knowledge of the children's inner workings. I did do my best to decouple, but I learned as I went. (that's the nice way to put it!)

Exercise programming problem solving muscles

Self grade: A

The muscles are sore. And I'm not benching a metaphorical 300lb programming stack. But I've certainly re-awakened some of those logical decomposition skills.

Do Something Cool

Self Grade: A- : Well, at least >>I<< think it's pretty cool. However, I had visions of a very fast tracker with ominous voices tracking cubicle visitors. The NXT motors and my design just didn't seem to allow that. I'm sure a better design could have alleviated some of the problems. Hrmmm...the NXTShot sure looks to be more responsive. I may just have to do some "mechanical design analysis" for a Mark III version. ;^)

Next up, videos and pix of current performance...

Friday, May 18, 2007

Picking up where we left off...

When you last left your intrepid hero, things were looking up. The system was back up and functional. Das blinkinlights were doing their thing. Life seemed grand.

And then reality.

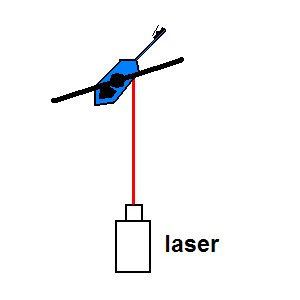

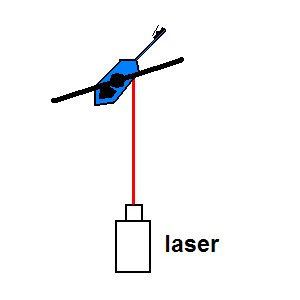

I hooked everything up, plugged it all in, and remounted the targeting laser. In a fit of brilliant insanity, I then fired up the control program and told the kids to build some lego towers to shoot down.

They obliged.

And all heck broke loose.

You see...when I hot-glued the targeting laser to the lego beam, it was kinda-sorta fudged on. "Gee, that looks pretty straight" sorta thing.

Well, it wasn't. From 24" away, the interceptor was landing missiles at least 2-3" off where the laser was pointing. Not good when the kids are so proud of themselves for actually getting the laser on the target and then the arrow has the GALL to land somewhere off yonder.

I gave everybody an extra turn, and promised to "fix the robot".
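To put a number on "pretty straight": if you assume the whole miss comes from the laser being glued on crooked (and not from the dart's flight or the laser sitting off to one side of the barrel), the misalignment angle is just an arctangent. A quick sketch in Python, with a made-up function name:

```python
import math


def aim_error_degrees(miss_inches, range_inches):
    """Angle between where the laser points and where the shot lands.

    Assumes the miss is purely an angular misalignment at the muzzle.
    """
    return math.degrees(math.atan2(miss_inches, range_inches))


low = aim_error_degrees(2, 24)   # the 2" end of the miss
high = aim_error_degrees(3, 24)  # the 3" end of the miss
```

That works out to somewhere between roughly 5 and 7 degrees of crooked, which is a lot to correct by eyeballing a blob of hot glue.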

Then I scratched my head. However am I going to "calibrate" this laser?!! It's hotglued on fer pete's sake. Long story short, I tried a bunch of lego jigs, but nothing worked quite right. So I resigned myself to removing and re-gluing.

Of course, this necessitated some sort of aligning jig. In a fit of inspiration (I have them often. Fits, that is. Inspiration more occasionally.) I envisioned some sort of calibration jig.

Mounted the laser. Shined it on the brick. Hotglued everything in place.

Ok, that's on the mechanical side. What about on the software side?

I've been ruminating on how to keep a solid lock on the laser from the camera. Camera noise (spurious RGB values from the cheap webcam) has been somewhat of a problem. As well, the inherent nature of 160x120 video (never did solve that) has been an issue.

So I did what any self-respecting engineer would do. I upped the minimum hardware requirements. ;^)

I have an older DV camcorder that will only function as a camera, now. So I bought an inexpensive 1394 PC card from Newegg, paired it up with the Sony DV camcorder, and now I get BEAUTIFUL CLEAR pictures! Better optics, less noise, AND I can turn off the auto-exposure/brightness in cam. Sweet.

Of course, that's all fine and good...but we still have the "oblique laser" problem.

If the target surface is at a nice 90 degrees to the laser, we get the standard bright red dot.

HOWEVER...if the target is at an oblique angle...

The laser's beam is spread out in a "puddle", and it's overall less noticeable, and likely not the brightest spot.

I had an epiphany today. (I promise, the point is coming soon!)

I happened across the "How Stuff Works" page on the Apache helicopter's Hellfire missiles. (mmm...missiles) And I was inspired by the targeting method. Apparently, the older Hellfires were laser targeted: the missile would seek whatever target was being painted by the laser. But not just any laser...

A laser that pulsed to a pre-determined code. (that was downloaded to the missile prior to launch)

"Ah-HA!" I thought. If I can somehow parse out the laser (maybe it's got unique HSV values?!!) and match what I think the target point is against some sort of pulse pattern...hmm...

Thinking...thinking...
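While I'm thinking, here's one way the pulse-matching half of that idea might go. It's a rough Python sketch, not anything from the actual project: it assumes the laser blinks one step of its code per video frame, that each candidate spot's brightness has been recorded frame by frame, and that a simple brightness threshold can tell "on" from "off". The function name, the threshold and the code pattern are all invented for illustration.

```python
def matches_pulse_code(brightness_history, code, threshold=200):
    """True if a spot's recent on/off history lines up with the pulse code.

    brightness_history: brightness of one candidate spot, one value per
    frame, newest last. code: the expected on/off pattern as a list of
    0/1 values, assumed to repeat forever at one step per frame.
    """
    n = len(code)
    if len(brightness_history) < n:
        return False  # not enough frames yet to decide

    # Turn the last n brightness readings into on/off bits.
    observed = [1 if b >= threshold else 0 for b in brightness_history[-n:]]

    # We don't know where in the cycle we started watching,
    # so try every rotation of the code.
    return any(observed == code[shift:] + code[:shift] for shift in range(n))
```

A lamp or a shiny reflection that stays bright the whole time never matches a code that contains an "off" step, which is the whole point: the dot has to blink the right way, not just be bright.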

Friday, April 13, 2007

The pricewars begin...

Ok, now I understand how all those ebay-ers can sell NXT's for a "buy it now!" price of $220 and make money...

Not only is CompUSA selling the NXT for $199, but now Best Buy is doing it for $189 + $6 shipping. (thanks NXT Step!)

Woot!

Those wild thoughts of buying a second and third set are getting correspondingly more tempting... =^)

Wednesday, April 11, 2007

Trackin fool

Refactoring to allow for testing is sooooo a good thing.

I finally had the brainstorm. I'd been developing two separate projects for this. One is down-n-dirty, get 'er in. Test it out. Oops..that's ugly but it works.

And one "nice, pristine, how it's supposed-to-be".

I'll let you guess which one actually made progress. For a while.

The pretty one was essentially used as a testing ground for NUnit. And therefore has a nice bunch of unit tests.

And then it happened in the "real" project. Ka-blam. Hit a wall. Why isn't this working?!! It should work. The logic is right. Those d!$#m gremlins are getting in between the parsing and compiling stages. I just know it.

Deep breath. Back off. Put the mouse down. Take a walk.

Then it hits me. I'm having so much trouble (partially) because I'm having to do so much crufty liberal insertion of System.Diagnostics.Debug.WriteLine.

(true confessions, here)

And it's a PITA to debug. Because...well...things are so tightly coupled I'm not quite sure WHERE to put in the debug statements and even when I do I'm making assumptions about other pieces working and that leads to...

Well, we all know what happens when you assume.

So in a fit of brilliantly obvious inspiration, I did two things.

1.) I ported the unit tests over to the "really/working but somewhat crufty" implementation.

2.) Did some decoupling. Specifically there were three pieces:

- determine which way I'm supposed to go based on where I am and where the target is

- issue a command to move the robot ( myrobot.moveright() )

- translate the moveright into actual motor directions (move motor b @ 70% power in the left direction)

The third had already been isolated. But I was doing 1 and 2 in the same function. I pulled them apart. Now a parent function says "where should we go?" and the fxn returns a "left, down" or somesuch.

NOW we're cookin. Unit tests were written for each of 4 quadrants, like so:

    // $ = target point (where to move to)
    //
    // --------------------* < -100,100 (max rot values)
    // | quad 1  | quad 2  |
    // |         |         |
    // |         |         |
    // ----------$----------
    // | quad 3  | quad 4  |
    // |         |         |
    // |         |         |
    // *--------------------
    // ^(100,-75) min rot values

And as the French say, "voilà!" (literally, "let us eat cheese!")
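For flavor, the decoupled "where should we go?" piece boils down to something like the sketch below. It's Python rather than the project's C#, the function name and the `deadband` parameter are invented, and the sign convention (pan grows to the right, tilt grows upward) is an assumption for the sketch, not necessarily how the real rotation values run.

```python
def direction_to(current, target, deadband=0):
    """Return the (horizontal, vertical) moves from current to target.

    current and target are (pan, tilt) rotation counts. Assumed
    convention: pan grows to the right, tilt grows upward. deadband is
    how close counts as "close enough" on each axis.
    """
    pan, tilt = current
    target_pan, target_tilt = target

    if target_pan - pan > deadband:
        horizontal = "right"
    elif pan - target_pan > deadband:
        horizontal = "left"
    else:
        horizontal = "hold"

    if target_tilt - tilt > deadband:
        vertical = "up"
    elif tilt - target_tilt > deadband:
        vertical = "down"
    else:
        vertical = "hold"

    return horizontal, vertical
```

Because the function only answers the question and never touches a motor, one test per quadrant is trivial: park the current position in each quadrant, put the target in the middle, and check the answer that comes back.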

In the end all was happy. Except tracking was much less precise than I'd like. Gear slop and imprecision in initial calibration were affecting things far more than I'd like.

So...where are we at?

The laser tracking worked best for precision...but it suffered from light refractions and went somewhat nuts in anything but a nice, lowlight environment. Oh, and if the beam was scattered or at an angle (think: shining a laser pointer on a table at a steep angle), things went wonky. Chasing butterflies.

Dead reckoning isn't particularly precise...but it's kinda/sorta "in the neighborhood".

Can I combine the two? Maybe limit the search radius for the laser pointer to within x pixels of the estimated dead reckoning solution? It's a thought.
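Something like this is what I have in mind, sketched in plain Python instead of C#. It assumes the frame has already been boiled down to a grid of brightness values and that dead reckoning hands back an (x, y) pixel estimate; the function name and the radius are hypothetical.

```python
def find_laser_near(frame, estimate, radius):
    """Brightest pixel within `radius` pixels of the dead-reckoning estimate.

    frame is a list of rows of brightness values (0-255); estimate is an
    integer (x, y) pixel position. Returns (x, y) of the brightest pixel
    in the search circle, or None if the circle misses the frame.
    """
    ex, ey = estimate
    best, best_value = None, -1
    for y in range(max(0, ey - radius), min(len(frame), ey + radius + 1)):
        row = frame[y]
        for x in range(max(0, ex - radius), min(len(row), ex + radius + 1)):
            if (x - ex) ** 2 + (y - ey) ** 2 > radius ** 2:
                continue  # inside the bounding square but outside the circle
            if row[x] > best_value:
                best, best_value = (x, y), row[x]
    return best
```

With a small radius, a bright reflection on the far side of the frame never gets considered, even if it outshines the laser dot. The catch is that the radius has to be at least as big as the dead-reckoning error, or the real dot falls outside the search.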

But regardless, it'll be integrated and tested. =^)

In Mechanical news...

The gearing has been reduced and reworked for both pan and tilt. Of course, this adds to the gearlash problem, but it was necessary for precision reasons.

("Slow 'er down!" everyone said.)

(Well, not everyone.)

(Ok, maybe just me.)

Anyway.

But it's working. Back to "das blinkinlights" working.

But now we've got

- boundary checking (no more chasing butterflies and grinding gears)

- Four shot rotary magazine

- Smaller tilt cradle footprint (geez. I should be in marketing. No...no, actually. I shouldn't)

- A cool "fah-WOOSH!" sound when the missile is fired. (put that in for the kids)

Ok. Go to it.

Wednesday, April 04, 2007

Avast ye scurvy dogs, protect the staplers at all costs!

...because...you know...that's about the highest aspiration I have for this once it's done.

Oh, and it'll look cool. And I'll have the undying admiration of my coworkers. Some of them. A few. Ok, at least one.

In other news, a reader (from whence he came I know not..!) pointed me to a project he's working on.

http://www.foxbox.nl/lego/index.asp?FRMid=39

Check it out! Similar to JP Brown's Aegis, but with his own unique execution...and he's got pictures...LOTS of pictures, and even a few videos.

Actually, check the site out even if you're not interested in this. This guy rivals Philo for the pure amount-of-stuff that he's built and documented. I particularly liked the automated battery tester.

In general construction news...

Given my own...umm...unique execution of the pan-n-tilt, I may have to crib some of these guys' executions. If nothing else, I'm thinking of rebuilding the firing mechanism with a conventional lego motor for compactness.

Of course, then I lose the ability to control rotation via the built in rotation sensors...drat drat drat...everything's a tradeoff. Hmm. Maybe I'll just attempt to rebuild using the nxt motor and trying to move things more inboard...

In other news, the dead reckoning method for moving stuff around is coming...well...it's coming...umm...yeah.

After more than a bit of headscratching the boundary detection is working. That is, I can calibrate the "aim box" for the beast (max and min horizontal and vertical rotations) and if we start to point outside of those boundaries, the control code catches it and moves back inside.
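In sketch form, the aim box amounts to four calibrated limits plus a check. This is Python rather than the project's C#, the class and method names are invented, and the numbers in the usage lines are placeholders, not my actual calibration.

```python
from dataclasses import dataclass


@dataclass
class AimBox:
    """Calibrated limits for pan and tilt, in motor rotation counts."""
    min_pan: int
    max_pan: int
    min_tilt: int
    max_tilt: int

    def contains(self, pan, tilt):
        """True if the given aim point is inside the calibrated box."""
        return (self.min_pan <= pan <= self.max_pan
                and self.min_tilt <= tilt <= self.max_tilt)

    def clamp(self, pan, tilt):
        """Nearest point inside the box; unchanged if already inside."""
        return (min(max(pan, self.min_pan), self.max_pan),
                min(max(tilt, self.min_tilt), self.max_tilt))


box = AimBox(min_pan=-100, max_pan=100, min_tilt=-75, max_tilt=100)
safe_pan, safe_tilt = box.clamp(120, -90)  # pulled back to the box edge
```

The control loop would call `contains` on the current rotation readings and, when it returns False, drive toward whatever `clamp` returns.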

However, I had two setbacks when translating this to dead reckoning movement.

1.) I've got a @#$!$ bug in the code. Currently whenever I tell it to "track to a point!" it's thinking that the point is somewhere between its toes and left armpit. I.e., it moves left and down...left and down...always left and down. I've stared at the code till my eyes bugged out and the logic error is still eluding me. But I'll find and squash it. (eventually)

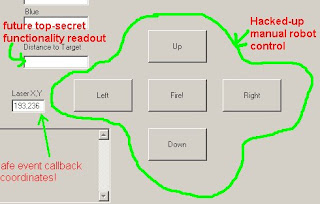

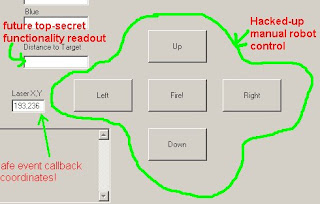

2.) I..umm...err. (this is really embarrassing) I lost my control buttons. Yeah, these:

I was playing with tab layout controls, and I think somewhere I grabbed and moved them. Somewhere. As a group.

And now while Visual Studio thinks they're there (and all the handlers/etc are still present) they are naught to be found on the form.

Of course my (ever bright, savvy, and non-developer) wife's perspective was: "You have backups, right? You keep versions don't you?"

Argh! All that ranting and raving about idiots who code without source control comes crashing down upon my head...she must have actually been listening.

Yes, I do have a previous version in subversion. And it's only about 2-3 days old. But there's still quite a bit of difference 'tween the two.

The painful lessons are the ones best learned. (and this could have been MUCH more painful!)

Cheerio!

-aaron

Tuesday, April 03, 2007

Mindstorms NXT $199 @ CompUSA!

I just happened to be at my local CompUSA about 2 weeks ago looking for (oh the irony!) an inexpensive USB joystick to control the robot.

Lo and behold...in the same aisle, facing shelves on the bottom were [da dum!] Mindstorms NXT kits. "Nifty enough", says I, "but what's that little sticker..."

Holy hot hannah batman...$199! (the sticker said "new low price!")

The link above is to the online store, which also reflects the $199 price. Alas, they are all sold out for delivery. However, my (local) store has some in...if you have a local CompUSA you might just be as lucky!

I've been itching for a second set already...mostly because I keep getting inspired by other folks' creations and want to give 'em a try without tearing apart my work-in-progress. Oh the agony, your name is Lego...

And the joystick? Eh...didn't buy one...yet. I'll tackle DirectInput and the attendant control issues later.
